Least Squares Regression Line

What is the least squares regression method, and why use it? The least squares method is a form of mathematical regression analysis that finds the line of best fit for a dataset, providing a visual demonstration of the relationship between the data points.

Points near the line get full weight.

It's called "least squares" because the line of best fit is the one that minimizes the variance (the sum of squares of the errors). I'm sure most of us have experience drawing lines of best fit, where we line up a ruler, think "this seems about right", and draw some lines from the x-axis to the y-axis. Points farther from the line get reduced weight.

Least squares regression line example. Our free online linear regression calculator gives step-by-step calculations of any regression analysis. Total least squares is a generalization of Deming regression and also of orthogonal regression, and can be applied to both linear and non-linear models.

The assumption that the random errors have constant variance is not implicit to weighted least-squares regression. In the case of one independent variable, it is called simple linear regression. If you know a bit about NIR spectroscopy you sure know very.

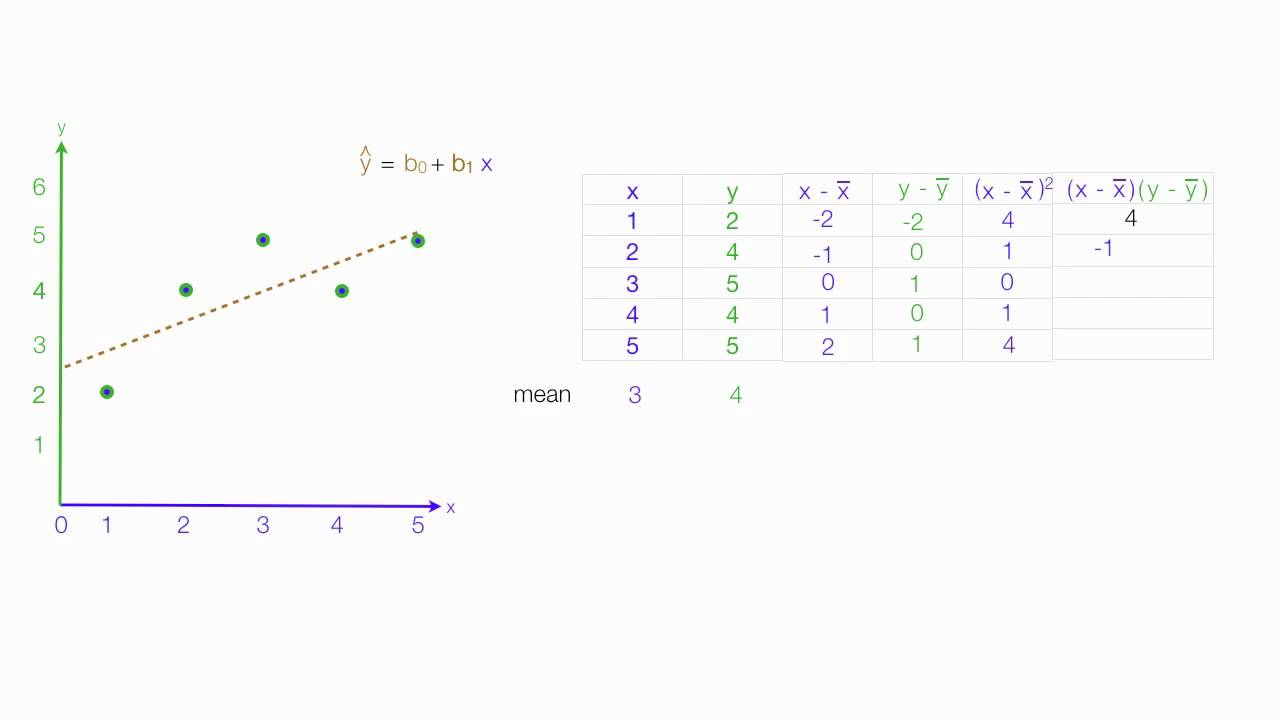

The name of the least squares line explains what it does. If the relationship between two variables appears to be linear, then a straight line can be fit to the data in order to model the relationship. The slope $\hat{\beta}_1$ of the least squares regression line estimates the size and direction of the mean change in the dependent variable y when the independent variable x is increased by one unit.

The least squares regression line is one such line through our data points. The magic lies in how the parameters a and b are worked out. It helps us predict results based on an existing set of data, as well as identify clear anomalies in our data.
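As a minimal sketch of how a and b can be worked out, the function below uses the standard closed-form solution for a line y = ax + b with one independent variable. The function name `fit_line` and the sample data are illustrative assumptions, not taken from this article.

```python
def fit_line(xs, ys):
    """Return (a, b) for the least squares line y = a*x + b."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Sum of cross-deviations and sum of squared x-deviations.
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    a = s_xy / s_xx          # slope
    b = y_mean - a * x_mean  # intercept
    return a, b


# Illustrative data only (hypothetical, not from the article).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
a, b = fit_line(xs, ys)
print(f"y = {a:.2f}x + {b:.2f}")
```

Once a and b are known, a prediction for a new x is simply `a * x + b`.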

The principle of least squares means minimizing the sum of the squares of the differences between the observed values of the dependent variable and the values predicted by the fitted function. PLS, an acronym for Partial Least Squares, is a widespread regression technique used to analyse near-infrared spectroscopy data. The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the residuals, a residual being the difference between an observed value and the fitted value provided by a model.
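To make the overdetermined-system view concrete, here is a small sketch that assumes NumPy is available. It sets up four equations in two unknowns (slope and intercept) and lets `numpy.linalg.lstsq` find the coefficients that minimize the sum of squared residuals. The data values are illustrative only.

```python
import numpy as np

# Overdetermined system: 4 equations, 2 unknowns (slope and intercept).
# Illustrative data only (hypothetical).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 4.0, 7.0])

# Design matrix: one column for x, one column of ones for the intercept.
A = np.column_stack([x, np.ones_like(x)])

# lstsq returns the coefficients that minimize ||A @ coef - y||^2.
coef, residual_ss, rank, _ = np.linalg.lstsq(A, y, rcond=None)
slope, intercept = coef

# A residual is the observed value minus the fitted value.
residuals = y - A @ coef
sum_sq = float(np.sum(residuals ** 2))
print(slope, intercept, sum_sq)
```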

Linear least squares is a set of formulations for solving statistical problems involved in linear regression, including variants for ordinary (unweighted), weighted, and generalized (correlated) residuals. Least Squares Regression Line of Best Fit. So what does "least squares" really mean?

For more than one independent variable, the process is called multiple linear regression. The slope and y-intercept of the least squares regression line are computed from the data using formulas.
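For reference, the standard textbook formulas for the simple (one-variable) case are sketched below. The symbol $\hat{\beta}_0$ for the intercept and the bars for sample means are notation assumed here; in the form y = ax + b, the slope a corresponds to $\hat{\beta}_1$ and the intercept b to $\hat{\beta}_0$.

$$
\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}
$$

These follow from setting the partial derivatives of $\sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i)^2$ with respect to $\hat{\beta}_0$ and $\hat{\beta}_1$ to zero.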

The least squares regression line is the line that best fits the data. We start with a collection of points with coordinates given by $(x_i, y_i)$. In applied statistics, total least squares is a type of errors-in-variables regression, a least squares data modeling technique in which observational errors on both dependent and independent variables are taken into account.
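For a straight line in two dimensions, total least squares reduces to orthogonal regression, which has a closed form. The sketch below assumes equal error variance in x and y and a nonzero covariance between them. The function name `orthogonal_fit` and the data are hypothetical.

```python
import math


def orthogonal_fit(xs, ys):
    """Fit y = slope*x + intercept by minimizing perpendicular distances."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    s_yy = sum((y - y_mean) ** 2 for y in ys)
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if s_xy == 0:
        raise ValueError("zero covariance; this closed form does not apply")
    # Closed form for orthogonal regression (equal error variances assumed).
    diff = s_yy - s_xx
    slope = (diff + math.sqrt(diff ** 2 + 4 * s_xy ** 2)) / (2 * s_xy)
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Illustrative data only (hypothetical).
slope, intercept = orthogonal_fit([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(slope, intercept)
```

Unlike ordinary least squares, this fit treats x and y symmetrically: swapping the two variables gives the reciprocal slope.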

Least Squares Method. As long as your model satisfies the OLS assumptions for linear regression, you can rest easy knowing that you're getting the best possible estimates.

It applies the method of least squares to fit a line through your data points. The line of best fit is a straight line drawn through a scatter of data points that best represents the relationship between them. Suppose we wanted to estimate a score for someone who had spent exactly 23 hours on an essay.

The main purpose is to provide an example of the basic commands. In a room full of people you'll. Ordinary least squares (OLS) linear regression is a statistical technique used for the analysis and modelling of linear relationships between a response variable and one or more predictor variables.

In statistics, ordinary least squares (OLS) is a type of linear least squares method for estimating the unknown parameters in a linear regression model. Let us consider the following graph, wherein a set of data is plotted along the x- and y-axes. Line of Best Fit in the Least Square Regression.

In statistics, linear regression is a linear approach to modelling the relationship between a dependent variable and one or more independent variables. Instead, it is assumed that the weights provided in the fitting procedure correctly indicate the differing levels of quality present in the data. The graphical plot of the linear regression line is as follows.

$y = ax + b$. Specifying the value of the cv attribute will trigger the use of cross-validation with GridSearchCV, for example cv=10 for 10-fold cross-validation, rather than Leave-One-Out Cross-Validation. Find the least squares regression line for the data set as follows.

Partial least squares regression is an extension of the PCR (principal component regression) method that does not suffer from the mentioned deficiency. Least squares is a method to apply linear regression. The reader can download the line-spacing measurement data as a text file. Model Types and Weighted Least Squares.

The least squares regression line is the line that makes the vertical distance from the data points to the regression line as small as possible. Today we are going to present a worked example of Partial Least Squares Regression in Python on real-world NIR data. Hi everyone, and thanks for stopping by.

We also include the R-squared statistic as a measure of goodness of fit. We can place the line by eye.
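The goodness-of-fit statistic mentioned above can be computed as one minus the ratio of the residual sum of squares to the total sum of squares. This is a minimal sketch: the function name `r_squared`, the data, and the line parameters (assumed to come from an earlier least squares fit of the same data) are all illustrative.

```python
def r_squared(xs, ys, a, b):
    """Coefficient of determination for the line y = a*x + b."""
    y_mean = sum(ys) / len(ys)
    # Variation left unexplained by the line.
    ss_res = sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))
    # Total variation of y around its mean.
    ss_tot = sum((y - y_mean) ** 2 for y in ys)
    if ss_tot == 0:
        raise ValueError("all y values are identical; R-squared is undefined")
    return 1 - ss_res / ss_tot


# Illustrative data; a = 0.6 and b = 2.2 are the least squares
# estimates for this particular hypothetical data set.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
r2 = r_squared(xs, ys, 0.6, 2.2)
print(round(r2, 3))
```

A value near 1 means the line accounts for most of the variation in y; a value near 0 means it accounts for very little.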

The underlying calculations and output are consistent with most statistics packages. Linear least squares (LLS) is the least squares approximation of linear functions to data. Numerical methods for linear least squares include inverting the matrix of the normal equations and orthogonal decomposition methods.
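The two numerical routes can be compared on a small problem. The sketch below assumes NumPy and uses QR factorization as one example of an orthogonal approach; the data is illustrative only.

```python
import numpy as np

# Illustrative data only (hypothetical).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 4.0, 7.0])
A = np.column_stack([x, np.ones_like(x)])  # columns: x, intercept

# Route 1: normal equations (A^T A) beta = A^T y.
# Solving the linear system avoids forming an explicit inverse.
beta_normal = np.linalg.solve(A.T @ A, A.T @ y)

# Route 2: orthogonal decomposition A = Q R, then solve R beta = Q^T y.
Q, R = np.linalg.qr(A)
beta_qr = np.linalg.solve(R, Q.T @ y)

print(beta_normal, beta_qr)
```

Both routes give the same coefficients here. The orthogonal route is generally the more numerically stable choice when the columns of the design matrix are nearly collinear.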

The formula for the line of best fit with least squares estimation is then. Least-angle regression is an estimation procedure for linear regression models that was developed to handle high-dimensional covariate vectors, potentially with more covariates than observations. The Lasso is a linear model that estimates sparse coefficients.

Try to have the line as close as possible to all points and a similar number of points above and below the line. Regression is a powerful analysis that can analyze multiple variables simultaneously to answer. Any straight line will pass among these points and will either go above or below each of these.
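To see why a line placed by eye is usually not the best one, its sum of squared errors can be compared with that of the least squares line. In this sketch the data and the by-eye guess y = x + 1 are hypothetical; the values a = 0.6 and b = 2.2 are the least squares estimates for this particular data set.

```python
def sum_squared_errors(xs, ys, a, b):
    """Sum of squared vertical distances from the points to y = a*x + b."""
    return sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))


# Illustrative data only (hypothetical).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

by_eye = sum_squared_errors(xs, ys, 1.0, 1.0)  # hypothetical guess: y = x + 1
fitted = sum_squared_errors(xs, ys, 0.6, 2.2)  # least squares line for this data

print(by_eye, fitted)
```

No other straight line gives a smaller sum for this data than the least squares line, which is exactly the property the method is named after.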

The line of best fit is a mathematical concept that correlates points scattered across a graph. Linear Least Squares Regression. Here we look at the most basic linear least squares regression.

OLS chooses the parameters of a linear function of a set of explanatory variables by the principle of least squares. 2 9 5 7 8 8 9 2. Ordinary least squares (OLS) is the most common estimation method for linear models, and that's true for a good reason.

Anomalies are values that are too good or too bad to be true, or that represent rare cases. It is assumed that you know how to enter data or read data files, which is covered in the first chapter, and that you are familiar with the different data types. The equation of the regression line is calculated, including the slope of the regression line and the intercept.

Also work out the estimated value of y when x is 2. Imagine you have some points and want to have a line that best fits them, like this. Unlike linear and nonlinear least squares regression, weighted least squares regression is not associated with a particular type of function used to describe the relationship between the process variables.
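Weighting can be applied to any model form; as one possible illustration, the sketch below applies it to a straight line y = ax + b by minimizing the weighted sum of squared residuals. The function name `weighted_fit`, the data, and the weights are hypothetical.

```python
def weighted_fit(xs, ys, ws):
    """Return (a, b) minimizing sum(w * (y - (a*x + b))**2)."""
    if not (len(xs) == len(ys) == len(ws)):
        raise ValueError("xs, ys and ws must have the same length")
    w_total = sum(ws)
    if w_total <= 0 or any(w < 0 for w in ws):
        raise ValueError("weights must be non-negative with a positive sum")
    # Weighted means of x and y.
    x_mean = sum(w * x for w, x in zip(ws, xs)) / w_total
    y_mean = sum(w * y for w, y in zip(ws, ys)) / w_total
    s_xy = sum(w * (x - x_mean) * (y - y_mean) for w, x, y in zip(ws, xs, ys))
    s_xx = sum(w * (x - x_mean) ** 2 for w, x in zip(ws, xs))
    if s_xx == 0:
        raise ValueError("weighted x values have no spread; slope is undefined")
    a = s_xy / s_xx
    b = y_mean - a * x_mean
    return a, b


# Illustrative data: the last point is a low-quality measurement,
# so it is given zero weight (hypothetical values).
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 30]
ws = [1, 1, 1, 1, 0]
a, b = weighted_fit(xs, ys, ws)
print(a, b)
```

With all weights equal, the result is the same as the ordinary (unweighted) least squares line.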

As you can see, the least squares regression line equation is no different from the standard expression for linear dependency. This can be a bit hard to visualize but the main point is you are.
